Dev Retro 2022: Reviewing 2 Years of Professional Open Source Development Experience

A review of my time at Rapid7 so far as a Metasploit developer, as I look at how to grow into a senior researcher.

Introduction

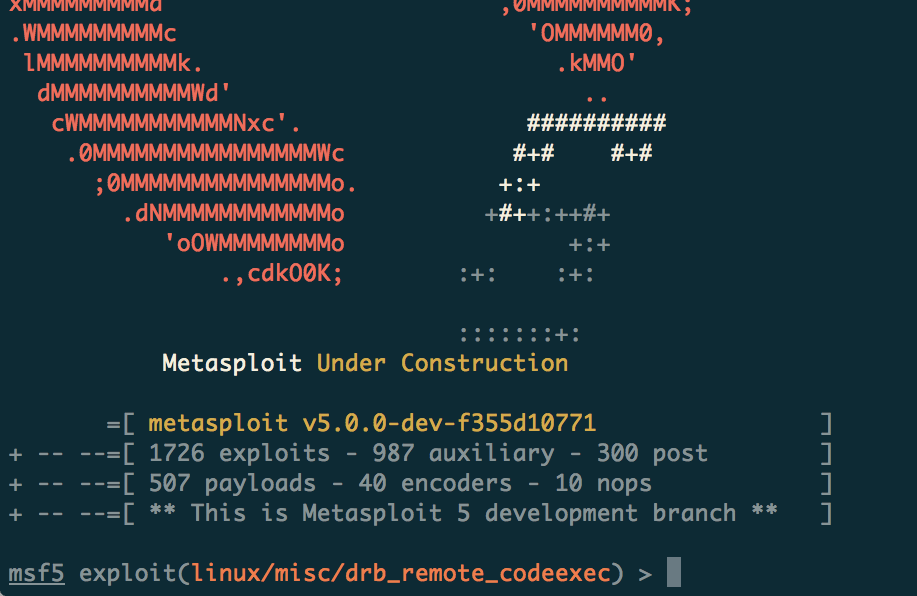

Welcome! My name is Grant Willcox and I work at Rapid7 as an open-source software (OSS) developer on Metasploit Framework, an open-source penetration testing framework. It gives security professionals a repository of offensive security tools, along with a centralized console interface, that they can use to audit and test the security of systems.

I have worked at Rapid7 for 2 years and am approaching my 3rd. Working on Metasploit has been my first experience developing open-source software. Before Rapid7, I worked as a pentester in the UK for NCC Group and as a professional exploit developer for VerSprite and Exodus Intelligence. These jobs gave me a lot of my foundational programming and security skills, but I always felt like I was missing the feedback loop that comes with working in public.

Working in public has been quite an experience and a shift in mindset, so let's look over some of the things that have come up and some of the lessons that I have learned during this transition.

Lessons Learned

Lesson 1: Make Your Code Reviews To The Point And Consistent

I know this gets talked about a lot, but honestly one of my biggest issues was being consistent in my code reviews. Several times this year I left very long reviews with over 30 comments on a pull request, only to get a reminder from my manager that that many comments won't help the contributor improve.

The interesting part of this for me was realizing that whilst I was trying to help people by giving them complete feedback on everything, ultimately, as a maintainer, I need to step in and make improvements to their PR myself where possible.

Often this means that instead of commenting on spelling mistakes or on a few lines that could be refactored, you simply step in and make those changes yourself.

There is a great explanation of this that I found somewhere on the internet (for the life of me I can't find the source now), which stated that for the first few times someone contributes, you want to get them used to contributing and take on all of the responsibility for fixing their code yourself. The idea is that they are contributing new code to your project, and you want them to keep contributing by making their initial submissions as easy and smooth as possible. Additionally, there is a good chance that they won't return after their first or second submission, so you want to do everything you can to improve the odds that they come back.

Only after a contributor has made their 3rd or 4th submission should you slowly start helping them understand your contribution guidelines and good coding practices, and begin mentoring them into becoming a better developer. The reason is that only at this point have they shown that they are committed to improving the project and are likely to keep contributing. Before this, it is very hard to tell whether they will stick around, and as maintainers we often have limited time and resources for a lot of tasks, so it is in your best interest to make sure they are likely to keep submitting to your project before making these sorts of suggestions.

Lesson 2: Open Source Projects Live and Die By Their Contributors

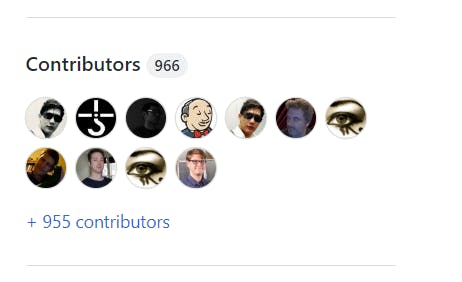

At the time of writing, Metasploit Framework has 966 contributors. This includes some bot accounts and the like, so the real number is probably closer to 920, but that is still a lot of people! You can see the list of contributors for any project on GitHub, along with stats on those users, by going to github.com/<company or contributor GitHub name>/<project>/graphs/contributors. You can also see the pulse of a project by visiting github.com/<company or contributor GitHub name>/<project>/pulse.

Looking at all of these contributors, you may be asking yourself, like I did, "Okay, I see these large numbers, but how does this tie into my daily work and priorities?". For the longest time this didn't make sense to me, until someone showed me a graph of our biweekly release cycles with a breakdown of how many contributions were made by Rapid7 employees versus external contributors. External contributions hovered at around 50% and sometimes exceeded that.

To put that in perspective, whilst Rapid7 develops and maintains Metasploit, on average roughly half of the code is contributed externally. Simply put, Metasploit would not exist without the help of the security community.

Once I saw this, I realized that the effort spent bringing in new contributors is essential work. Any contributor can reasonably be expected to move on after 1-3 years of contributing. Very few people stick around longer than that, and those who do tend to be extremely valuable.

Therefore, open-source projects must constantly try to bring in new contributors. At the very least this keeps the number of people contributing to the project steady, which is an important factor when judgment calls need to be made regarding resourcing and availability. If the number of contributors goes down, less new content will go into the project, and the core team may need to shelve some of their larger plans to make sure they are meeting the project's core needs. Conversely, if more contributors are actively contributing, there will be a greater influx of content. That gives the maintainers more flexibility to work on larger features, since community contributions keep enough content flowing in that these bigger projects won't disrupt operations.

Lesson 3: Projects Should Be Prioritized by Impact on the Project and/or the Company

This was a hard one for me to understand, but it boils down to this: there will be projects that interest you and that have value to the company and your project, but that do not have the necessary impact. What do I mean by this?

Well, just because a project has value doesn't mean that it will change things for the better or actually "move the needle" in a significant way. One of the biggest challenges as an open source maintainer is realizing that there are a ton of good ideas out there. Unless you can tie them back to something that will have a decent impact on the customer base and the company (assuming your project is sponsored or owned by a company, as ours is), then regardless of how valuable you perceive an item to be, you're not doing your team or the company a favor by working on it when there is more impactful work you could be doing.

For this reason, in my view, it is important to always evaluate projects from the following perspectives:

What impact does this have on the company? Does this tie into some objective the company has set on its roadmap or an overall initiative the company is concerned about?

What impact does this have on the product's users? Are users able to do something they weren't able to before? Does this solve a major bug that has been preventing users from using the product as intended?

Is this going to positively move the needle forwards in a significant manner or is this just a minor enhancement? Put another way, are your feelings driving this decision, or is there actual data behind the change that backs up your suggestion and shows it will make a significant impact?

Improvements for 2023

Increase Flexibility and Forgiveness on PR Reviews - Aka Increasing Empathy

Right now, one of my key issues is not having enough flexibility and forgiveness for contributors. This comes largely from the high standards I hold for myself and my code, which I have inadvertently ended up imposing on others.

One of my goals here is to appreciate that a lot of our contributors are not professional coders and that their skill sets vary widely. In the next year, I'm aiming to improve on this further by reducing the number of comments I leave on contributors' code and fixing more things myself.

I would also like to reduce the time it takes me to review contributors' PRs. Part of this comes down to time management, but a lot of it also comes from my fear that I don't know the code well enough to give a proper review. Whilst this may be true, not giving a review at all is a disservice to those who contribute to Metasploit Framework and only slows things down. At the end of the day, if I make a mistake I can learn from it, but if someone stops contributing because they found the review process too slow, it is much harder to replace them, and that could have a knock-on effect for other users.

Identifying If Broken Features Should Work or Not

This one is tough for me to digest at times. A lot of issues are reported to Metasploit Framework, almost certainly more than the core team of 6 programmers can reasonably deal with within a year. However, not all of these issues are bugs, and even the ones that are still need to be triaged to determine their impact.

One of the mistakes I tend to make is assuming that just because a user reports something as broken, it should work the way they describe. This is not always the case, since the user may not fully understand the intent of the implementation and may be trying to use the software for a purpose it wasn't designed for. Taking this into account is a core skill that helps maintainers focus on the most relevant items, and it is something I will need to improve next year as I work on prioritizing my work further.

Increasing Ruby Knowledge

This is something I have gone back and forth on for a while. Over the last year I have vastly improved my Ruby knowledge, but some advanced Ruby concepts still trip me up. Additionally, I have frequently found myself banging my head against design decisions in Ruby and RuboCop that I was not aware of when writing code.

Therefore, I think one of my key goals this coming year is to learn more Ruby design patterns so that I am using the right design concepts, and to learn more about the design decisions behind Ruby so that I'm not writing code that violates those principles and potentially increasing my coworkers' review time.

Increasing Debugging Skills With Debug.gem

If you are a Ruby programmer and haven't already seen Stan Lo's talk on debug.gem, you are seriously missing out. His talk from RubyKaigi is well worth watching. It covers how to use the new debug.gem through a series of practical examples, working from the basics up to more advanced concepts that should help you solve trickier problems, all in a little over 26 minutes.

After watching this talk, I stumbled across his posts at https://st0012.dev/whats-new-in-ruby-3-2-irb and later at https://st0012.dev/ruby-debug-cheatsheet, which show how to combine IRB 1.6 with debug.gem for some very powerful debugging in Ruby, using many common debugging operations that were previously not possible in Ruby.

Ruby has historically been rather difficult to debug, and with the new debug.gem we are finally getting some much-needed debugging capabilities that put it in line with tools like Microsoft's WinDbg.

I'm looking forward to learning more about this tool and its common commands and shortcuts so that I can integrate it further into my workflow.
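As a minimal sketch of the kind of workflow the talk covers, the snippet below sets a breakpoint in code with `binding.break`. It assumes Ruby 3.1+, where debug.gem ships as a bundled gem (on older versions, `gem install debug`). The `xor_decode` helper and the `xor_demo.rb` filename are hypothetical, and the `defined?(DEBUGGER__)` guard is an assumption of this example rather than something from the talk: it lets a plain `ruby xor_demo.rb` run finish normally, while a run under `rdbg xor_demo.rb` pauses on the fourth byte so the block's locals can be inspected.

```ruby
# Hypothetical helper used only to demonstrate debug.gem breakpoints.
# XOR-decodes a string with a repeating key and returns a binary string.
def xor_decode(data, key)
  data.bytes.each_with_index.map do |byte, i|
    k = key.getbyte(i % key.bytesize)
    # Pause on the fourth byte, but only when debug.gem is loaded
    # (e.g. the script was started with `rdbg xor_demo.rb`); a plain
    # `ruby xor_demo.rb` run skips the breakpoint entirely.
    binding.break if defined?(DEBUGGER__) && i == 3
    byte ^ k
  end.pack("C*")
end

encoded = xor_decode("msfconsole", "k")
puts xor_decode(encoded, "k")
```

Under `rdbg`, the session may first pause at the top of the script; `continue` runs on to the breakpoint, where commands such as `info` (local variables), `next`, `step`, and `bt` (backtrace) cover the common debugging operations from the `(rdbg)` prompt.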

Improve Root Cause Analysis Skills

This is closely related to debugging, but learning how to accurately determine the root cause of a bug and then fix it is something I think I will always need to improve on. I'm hoping to at least get more comfortable with this in 2023 and to use some of the skills I learn with debug.gem to speed it up.

Ultimately, though, I will need to start creating more of the fixes myself, as this will broaden my understanding of the Metasploit code base and help me spot patterns that can speed up my code reviews.

Conclusion

Thanks for reading! If you have any comments or suggestions, I'd be happy to hear about them in the comments below. I'm always looking for ways to improve so all feedback is welcome!

Happy holidays!